Redefining Language Model interactions through DSPy: Evolving declarative LM calls into dynamic, self-improving pipelines.

Published:

DSPy Overview:

Arxiv Paper Accepted to the Twelfth International Conference on Learning Representations (ICLR 2024) Accepted to the NeurIPS 2023 Workshop R0-FoMo: Robustness of Few-shot and Zero-shot Learning in Large Foundation Models

Recent advancements in LMs are paving the way for an explosion in prompting techniques, finetuning, and adaptive reasoning. Despite rapid progress, LMs are sensitive to how they are prompted. Currently, we rely heavily on hand-crafted ‘prompt templates’—a practice that’s error-prone, often verbose, and doesn’t always generalize.

Introducing the DSPy Programming Model: the framework to solve advanced tasks with Language Models. Think Pythonic control flow for any pipeline—clean, efficient, powerful.

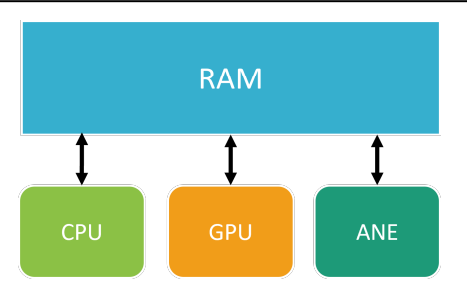

DSPy unifies prompting, finetuning, reasoning, and retrieval augmentation—offering significant enhancements for your LM pipelines. Just like how you handle layers in PyTorch, with DSPy, you declare the modules, what they’re supposed to do and call them in any control flow - loops, if statements, and more.

Three Pillars of DSPy: Signatures, Modules, Teleprompters

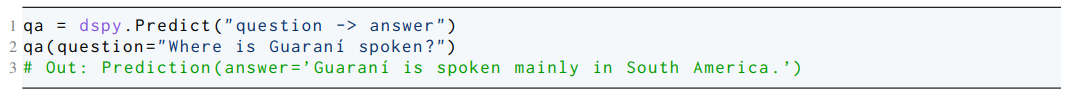

With DSPy, you aren’t restricted to free-form string prompts with the use of natural-language typed signatures. More formally, a DSPy signature is a tuple of input & output fields (sometimes with instructions). What’s amazing is the fact that DSPy infers roles based on these field names and optimizes accordingly, allowing users to define the ‘what’ of a task, like “consume questions and return answers,” without worrying about the ‘how’.

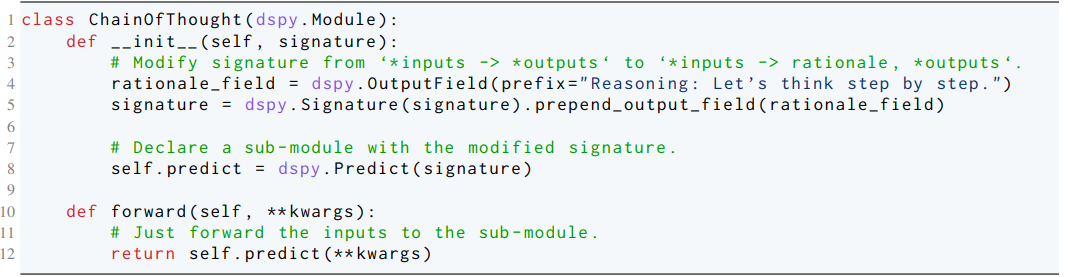

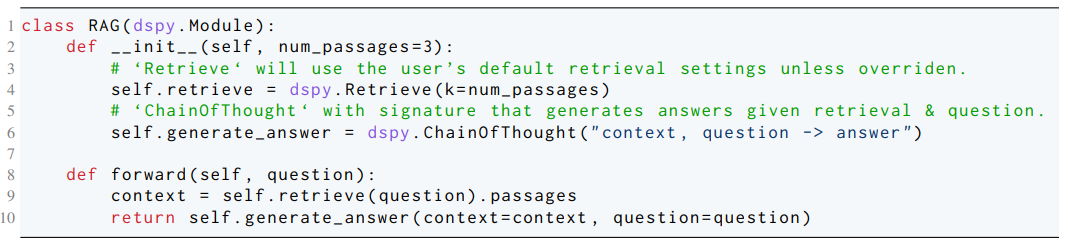

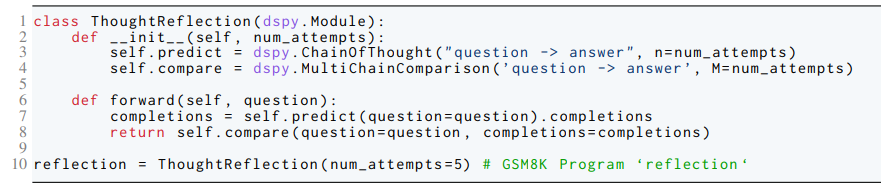

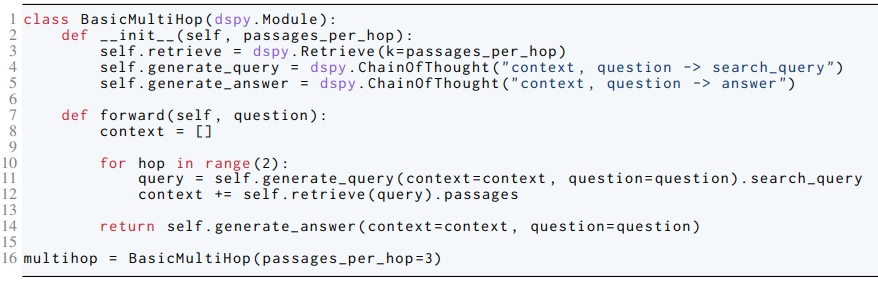

Once a signature is defined, modules can be declared with that signature, translating them into callable functions. DSPy’s core Predict module works with provided signatures, making LM calls, crafting prompts, and decoding outputs. Additionally, DSPy supports more sophisticated modules like ChainOfThought, ProgramOfThought, MultiChainComparison, and ReAct elegantly composed with just a few lines of code.

DSPy modules are analogous to neural network layers in Pytorch within LM pipelines but with the added flexibility of being parameterized for adaptability. This allows for customization based on the chosen LM, prompt guidelines, and demonstrations either as prompts or training data. Using DSPy’s ‘define-by-run’ methodology, users declare modules and then construct the desired pipeline. Example:

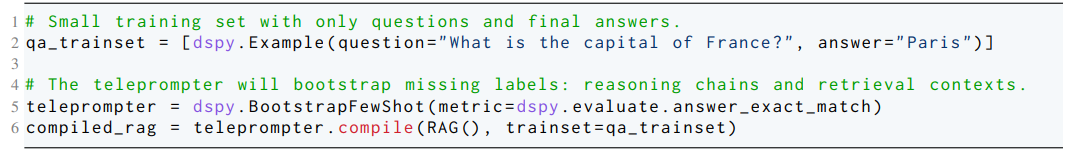

In DSPy, teleprompters act as optimizers, refining programs using training sets and chosen metrics. For instance, the BootstrapFewShot teleprompter simulates the RAG program on given training samples while gathering demonstrations for each module, ensuring the output aligns with defined signatures and metrics.

To further boost performance, DSPy introduces compilation to improve program performance through enhanced prompting or finetuning. During this phase, each Predict module within the program produces mock tests to identify the most effective demonstrations for the task. Optimization tools like BootstrapFewShotWithRandomSearch and BootstrapFewShotWithOptuna fine-tune this process, while BootstrapFinetune sharpens the LM for specific tasks. With teleprompters, DSPy turns rough draft programs into efficient solvers.

Well, this is smooth but how does DSPy perform on real-world data? We evaluated programs using DSPy modules and teleprompters on the GSM8k dataset with samples of 200 and 300 Q&A pairs for training and development respectively, and 1.3k official test set examples. Notably, leveraging ensemble optimizations within CoT attained 81.6% with the GPT-3.5 language model. We also introduced ThoughtReflection, a multi-stage ComparerOfThoughts module, which achieved 46.9% using the Llama2-13b-chat language model and the ensemble optimization techniques.

Diving deeper, we assessed multi-hop question answering using the HotPotQA dataset in the open-domain “fullwiki” context. With the help of ColBERTv2 for retrieval on Wikipedia’s 2017 “abstracts”, our tests comprised the official validation set, extracting 1k samples. We assessed CoT-RAG, ReAct, and our Basic Multihop program. With ensemble optimizations, the Basic Multihop program excelled, achieving 45.6% with GPT-3.5 and 41.0% with Llama2-13b-chat in exact answer matching.

So as we continue to embark into the future of language models, DSPy signals a new era of interacting with LMs—ensuring that we aren’t just speaking the language, but are masterfully composing it.

You can try DSPy in a free Google Colab notebook.

You don’t need an API key to get started. And we host two Wikipedia indexes (w/ ColBERTv2) to help you explore retrieval-based tasks.

Further Reading

To dive deeper into DSPy, refer to our paper, GitHub repository, and Twitter thread