Introducing Model Training on the Edge with PORT: Training on edge devices with unified-memory.

Published:

PORT Overview:

Under review.

As the digital landscape shifts to decentralization, everyday usage devices can serve as more than just common applications we use for daily tasks. This broadly describes the field of edge computing, or using these everyday ‘edge devices’ to serve as computational devices to execute machine learning tasks. Rather than relying on distant cloud servers, edge computing can bring the ability to run and even train models directly with greater proximity to the data source hosted on these localized devices.

Training on the edge represents the next step in making AI more integrated and adaptable to real-time processing. Utilizing devices in dynamic environments such as environmental analysis of remote surroundings or wearable devices that can diagnose health conditions requires real-time updates. Edge computing ensures operational functionality during offline settings, quick response times and processing, and real-time model updates, but also reflects a localized environment that minimizes the greatly-apparent risks of data breaches. With the emergence of data privacy and security concerns with sending and retrieving data from the cloud, the importance of keeping data within local ecosystems cannot be overstated, and on-device AI training offers the potential to provide a more trust-centric digital ecosystem.

However, utilizing edge devices is not without its challenges. With a localized environment, edge devices have significantly less computational power and limited memory constraints than these robust cloud servers.

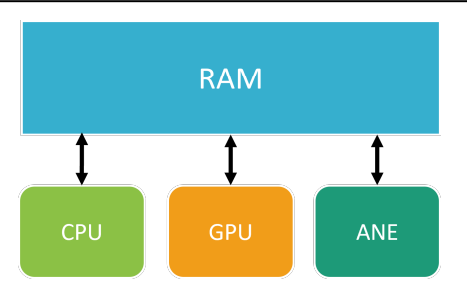

Introducing PORT - a solution designed to tackle the challenges of training memory-intensive models on memory-constrained edge devices. The solver serves as an accuracy-preserving algorithm that transforms training into an Integer Linear Programming problem. By doing so, PORT can schedule each neural network layer optimally across single or multi-accelerator platforms. By profiling each operator’s characteristics, PORT can make informed decisions on which accelerator to use for each task, be it a CPU, GPU, or any other available accelerator. PORT hence accounts for the device’s and model’s respective constraints and tailors its optimization to best suit these conditions.

Additionally, PORT encapsulates network optimizations specifically designed for reducing network training runtime while efficiently utilizing memory during computations. These optimizations include In-Place Convolution, which directly updates output tensors to minimize memory allocation; Patch-Based Inference, dividing data from batches to patches for lower memory consumption per convolution; Im2Col, transforming convolutional inputs for efficient matrix multiplications; Loop Reordering, realigning layers to optimize memory accesses and Operator Fusion to reduce intermediate layer operations to enhance computational speed.

PORT was tested on a variety of different models and edge devices, including both embedded and consumer-available edge devices. The embedded devices included ARM Cortex class devices like the M0, M4F, and Raspberry Pi 4B+ while more mainstream edge devices included the Nvidia Jetson Orin and the iPhone 13 and 15 Pro Max. Benchmark models covered ResNet and VGG models on the CIFAR image dataset while also including transformer models like BERT. With detailed profiling of the device accelerators, particularly taking advantage of the unified memory architecture of the edge devices, we tested PORT over its training runtime and memory consumption compared to baseline evaluation methods.

Looking ahead, we aim to expand PORT’s applicability to more recent edge devices featuring unified memory architectures like the Apple M-series Macbooks and iPhones alongside testing on LLMs like Llama2, GPT variants, and image generation models like Stable Diffusion. PORT’s potential to bring ML capabilities to edge computing is vast, and with open-source development, PORT will be further refined to handle novel tasks in memory-constrained environments. PORT represents a significant step in the direction of a more decentralized digital ecosystem, paving the way for a future where AI is seamlessly integrated within localized environments on devices at the edge of our fingertips.

Further Reading

To dive deeper into PORT, its formulation and its evaluation results, refer to the paper.